1. GBDS 4 Architecture Guide

1.1. Overview

GBDS is the ABIS component of the Griaule Biometric Suite. GBDS 4 is implemented over the Apache Hadoop 3 framework and uses many of its tools and components (such as Kafka, Zookeeper, Ambari, HBase, and HDFS) to implement a scalable, distributed, and fault-tolerant ABIS (Automated Biometric Identification System).

GBDS is responsible for:

- Storage: Persisting biometric records, client requests and responses, operation results, and logs in a scalable, fault-tolerant, distributed database.

- Extraction: Processing raw biometric data to compute templates that will be used for biometric matching.

- Matching: Performing biometric matching efficiently by distributing the load over a collection of hosts (nodes).

- Request Processing: GBDS receives requests from clients, manages their execution within the system nodes, and asynchronously notifies clients when responses become available. Requests are performed through an HTTP/HTTPS API. GBDS implements a high-availability, high-throughput endpoint for client requests.

This document describes the GBDS 4 architecture.

1.2. Hadoop Components

Apache Hadoop 3 is a collection of open-source tools and components for the development of distributed systems. Hadoop is written in Java, a technology present in over 13 billion devices. Hadoop development started in 2006, and it soon became the de facto standard for high-availability, fault-tolerant distributed systems. As of 2013, Hadoop was already present in more than half of the Fortune 50 companies.

GBDS uses several tools and components from the Apache Hadoop 3 ecosystem:

- HDFS is a distributed, scalable and portable filesystem. HDFS provides transparent data distribution between storage nodes and efficient storage of large files and large collections of files.

- HBase is a distributed non-relational database and is built upon the HDFS filesystem.

- Zookeeper is a distributed key-value store and is used by GBDS as a consensus manager.

- Kafka is a stream processing platform that manages distributed processing queues. Kafka manages queues called topics, which have content queued by producers and processed by consumers. Kafka efficiently distributes topic workloads among available nodes.

- Ambari is a monitoring and management tool for Hadoop clusters. GBDS system administrators interact with and manage their clusters through Ambari.

1.3. Nodes

A GBDS cluster is composed of nodes, and each node runs the exact same components: HBase, Zookeeper, Ambari, Kafka, and the GBDS Node Subsystem itself. All nodes can receive client requests from external applications, and all nodes can send asynchronous notifications to external client applications.

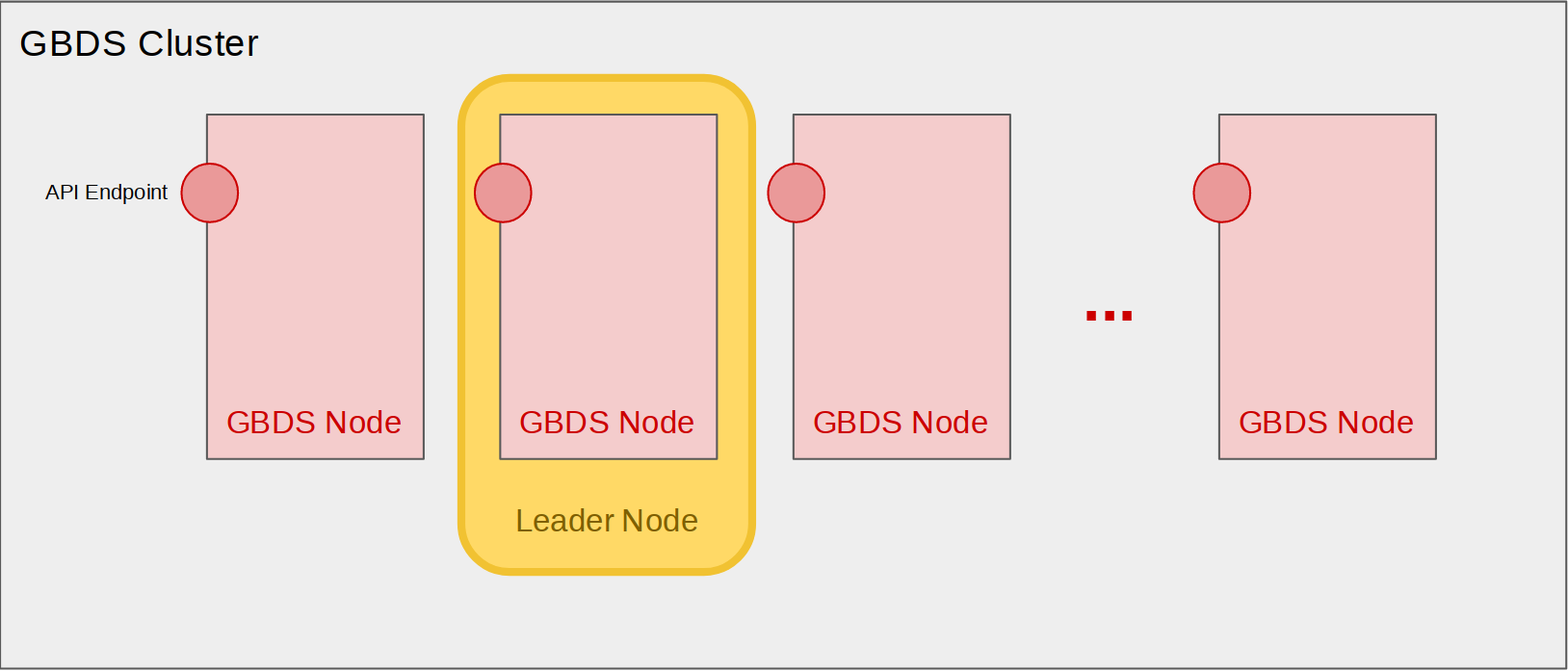

The following diagram shows the general structure of a GBDS cluster, which is a collection of GBDS nodes:

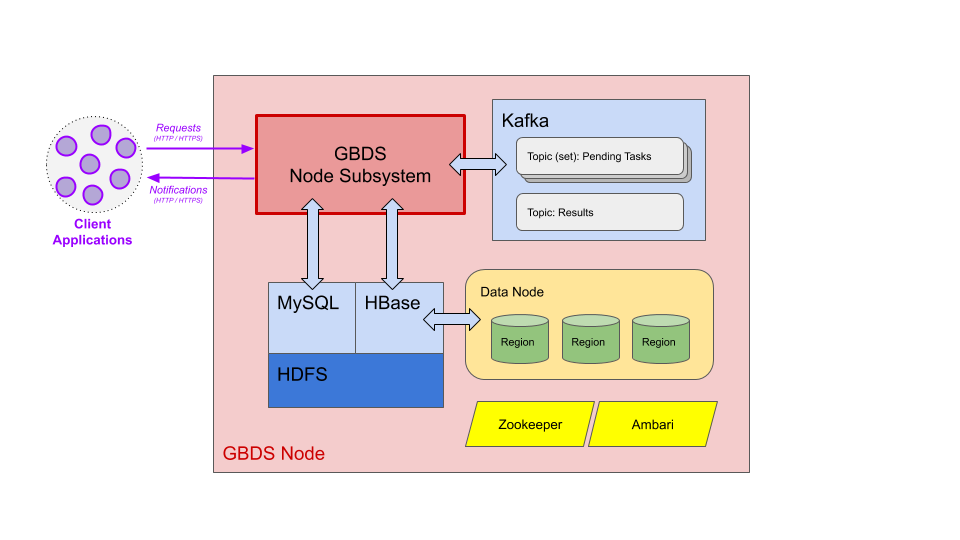

GBDS uses two different databases: MySQL and HBase. Biometric templates are stored in HBase, and data is split into subsets called regions. Each node is responsible for at least one region. Biometric data is transparently split into regions by HBase, based on the hardware capabilities of each node.

The MySQL database stores transaction metadata, biometric exceptions, criminal cases, profiles of enrolled people, and unsolved latents. This metadata references the corresponding HBase records, which hold the larger, processing-intensive data such as images and templates.

One node acts as the Leader Node. This node initializes the cluster and partitions the biometric database among the available GBDS nodes. The Leader Node is automatically chosen by Zookeeper.

The Kafka component in the cluster manages topics (queues) for pending tasks (to be processed by the cluster) and results (to be delivered asynchronously to clients or GBDS components when tasks are completed). GBDS has several pending task topics, one for each task priority. Tasks in higher priority topics are always consumed before the tasks in lower priority topics. GBDS has 8 priority levels: Lowest, Lower, Low, Default, High, Higher, Highest, and Maximum. Client applications cannot use the Maximum priority, which is reserved for internal GBDS operations. In this manual, the set of pending tasks topics is represented as a single entity.
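The priority rules above can be sketched as a small enumeration. This is an illustrative sketch only: the numeric values, the `pending_topic_for` helper, and the `gbds.pending.<level>` topic naming are assumptions made for the example, not names taken from GBDS.

```python
from enum import IntEnum


class Priority(IntEnum):
    """The eight GBDS priority levels; the numeric values are illustrative."""
    LOWEST = 0
    LOWER = 1
    LOW = 2
    DEFAULT = 3
    HIGH = 4
    HIGHER = 5
    HIGHEST = 6
    MAXIMUM = 7  # reserved for internal GBDS operations


def pending_topic_for(priority: Priority, internal: bool = False) -> str:
    """Return a hypothetical topic name for a pending task of this priority."""
    if priority is Priority.MAXIMUM and not internal:
        raise ValueError("Maximum priority is reserved for internal operations")
    # Assumed naming scheme; the real topic names are not given in this guide.
    return f"gbds.pending.{priority.name.lower()}"
```

With this sketch, a client request at `Priority.DEFAULT` maps to one topic, while a client request at `Priority.MAXIMUM` is rejected unless it is flagged as internal.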

The diagram below shows the components running in each node:

The GBDS Node Subsystem is responsible for the ABIS logic. It works as both producer and consumer for Kafka topics, and as both consumer of client requests and producer of client notifications.

1.4. GBDS Node Subsystem

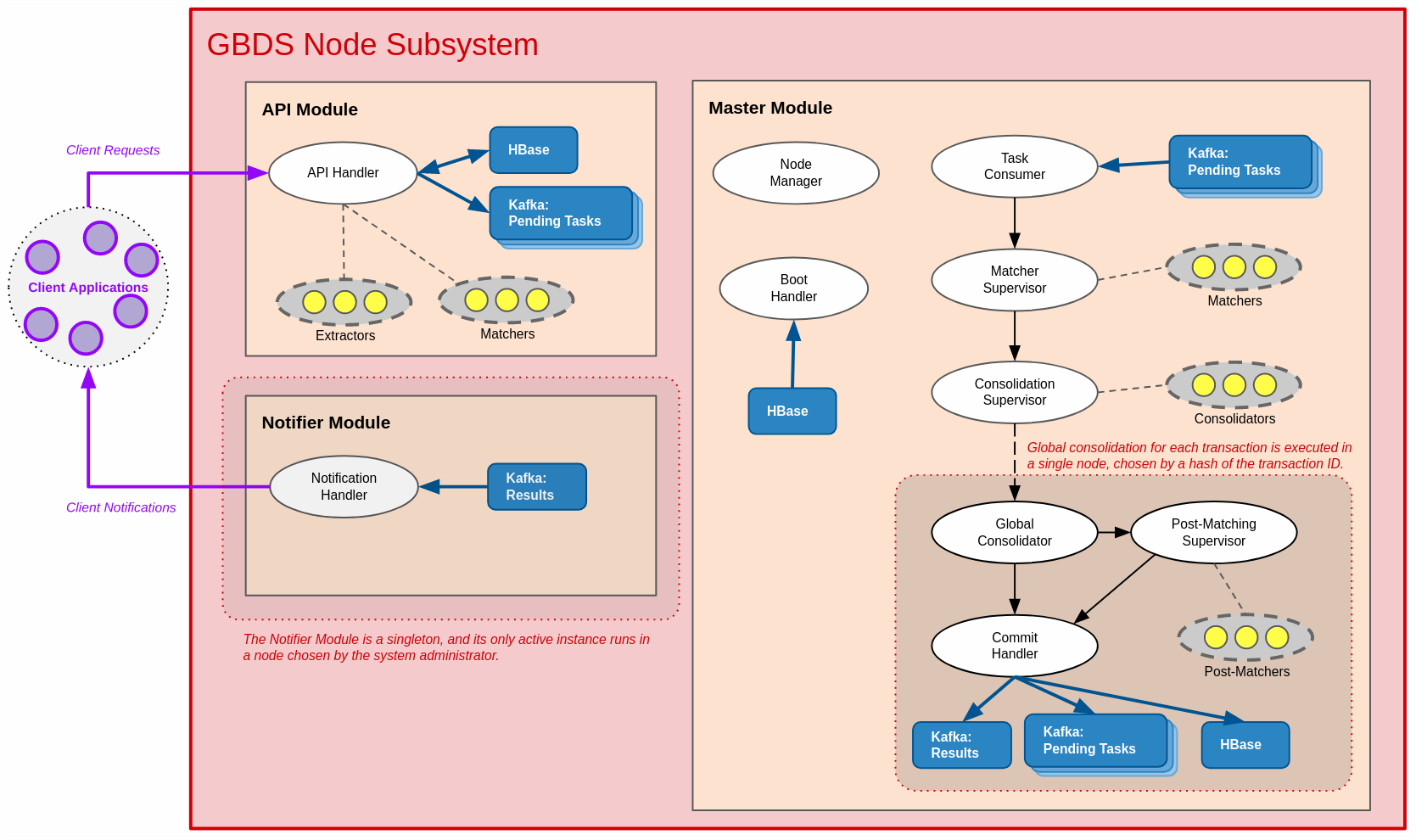

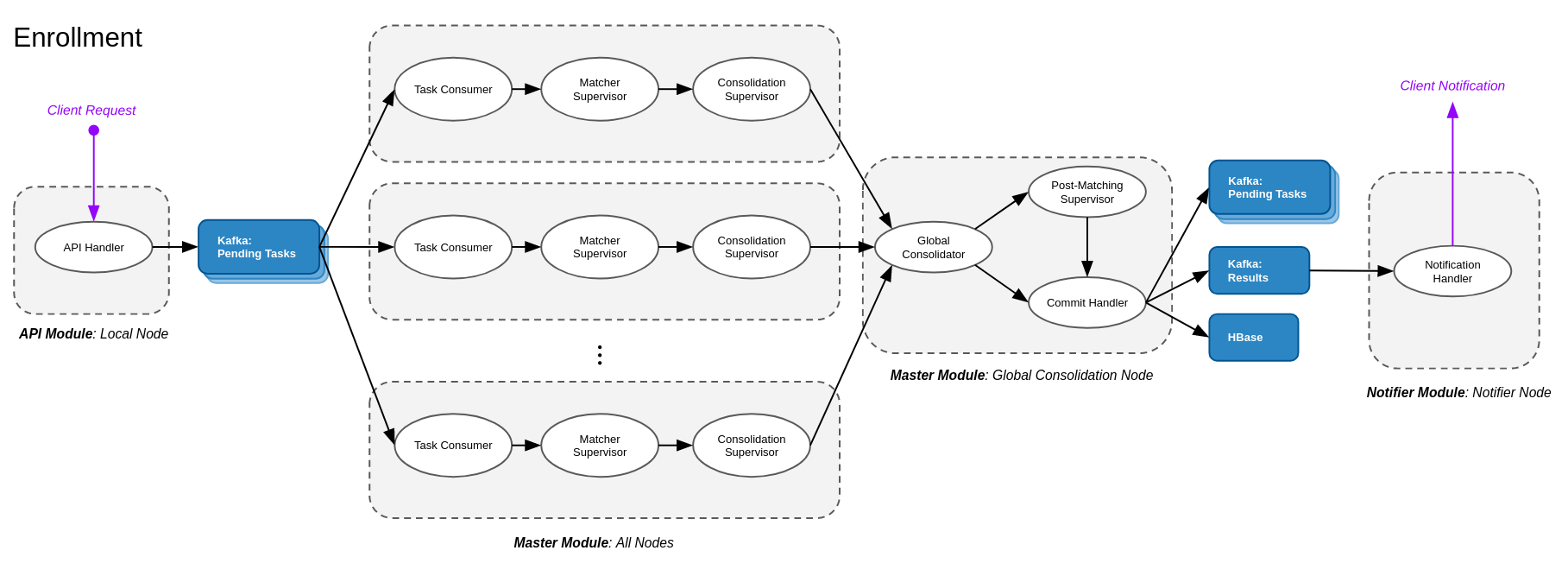

The GBDS Node Subsystem implements the specific workflows for the ABIS operation. It has three major internal modules: the API Module, the Master Module, and the Notifier Module. Each of these modules can be started and stopped independently in each node.

The diagram below shows the internal architecture of the GBDS component and the interactions between the components.

- When a client request is received by the API Module, it is either resolved locally or pushed to a Pending Tasks Kafka topic with the appropriate priority.

- The Master Module is responsible for handling fault tolerance, distributing and loading data from database to RAM at boot time, and processing distributed biometric tasks. It continuously consumes pending task items that involve distributed processing.

- When a client request is completed, the results are consolidated by a single specific node, which pushes the output to the Results Kafka topic. This consolidation node is determined by a hashing function on the unique identifier of the record being processed, which distributes the global consolidation tasks evenly across the cluster.

- The Notifier Module is responsible for consuming items from the Results Kafka topic and sending asynchronous notifications to external client applications. The Notifier Module is a singleton and can only be active in one specific node, chosen by the system administrator.

1.4.1. API Module

The API Module has one main component, the API Handler, which receives HTTP/HTTPS requests from external client applications and either 1) processes them locally; or 2) prepares the transaction for cluster processing and pushes it as a task to a Pending Tasks Kafka topic with the appropriate priority, so that it can be processed by the entire cluster.

The API Handler is responsible for performing biometric template extraction. If an incoming request contains raw biometric data (i.e., images instead of biometric templates), this component starts biometric extractor processes and/or threads in the local node to generate biometric templates from the raw biometric data. The choice of processes or threads depends on the biometric modality.

Enrollment and Identification (1:N) requests are pushed to a Pending Tasks Kafka topic and are processed by the entire cluster.

All other request types are processed locally by the API Handler. Any templates required by these transactions are either extracted locally or fetched from HBase, and client responses are sent synchronously: the Results Kafka topic and the Notifier Module are not involved.

These other requests can be:

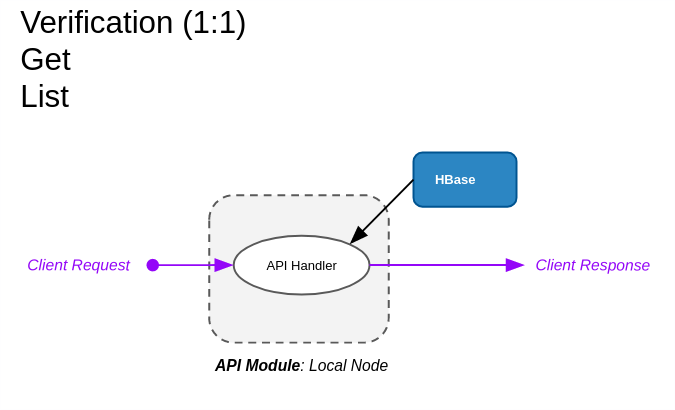

- Verification (1:1): The API Handler extracts the biometric template for the query (if sent as an image), fetches the reference template from HBase, performs biometric matching locally, and replies directly to the client.

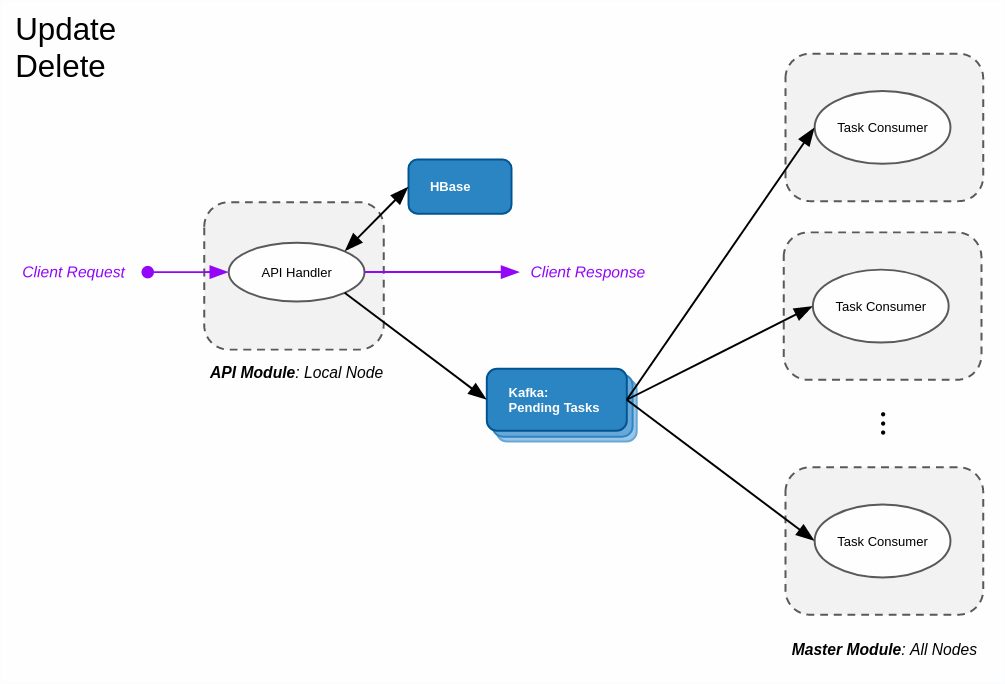

- Update: The API Handler updates the biographic and/or biometric data directly in HBase, and pushes a Pending Task item to Kafka in the Maximum priority topic to force the cluster nodes that have the affected record in RAM to refresh their in-memory records of the modified data from HBase before they start processing other non-Maximum priority tasks.

- Delete: The API Handler deletes the record from HBase, and pushes a Pending Task item to Kafka in the Maximum priority topic, forcing nodes that have the affected record to refresh their in-memory data before they start processing other non-Maximum priority tasks.

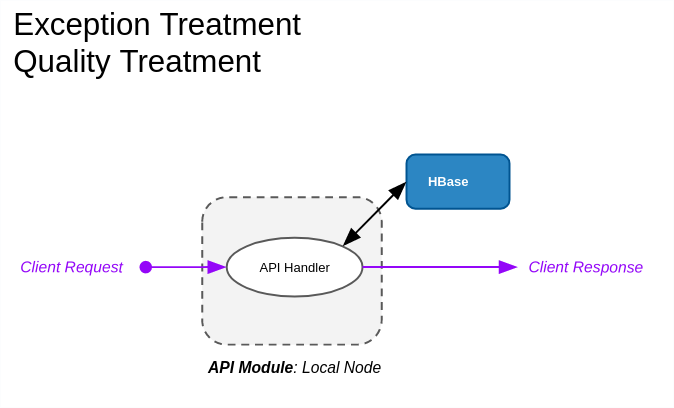

- Exception Treatment: The API Handler updates the exception record in HBase.

- Quality Treatment: The API Handler updates the transaction record in HBase.

- Get, List: These are read-only requests. The API Handler fetches the requested data from HBase and replies directly to the client application.
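The routing rules for the API Handler can be summarized in a short sketch. The request-type labels, the `route` function, and the shape of the returned dictionary are hypothetical and chosen only for illustration. The `adds_biometrics` flag reflects the rule, described under Transaction Workflows, that an update adding new biometric data follows the enrollment flow.

```python
# Request types named in this guide; the string labels are illustrative.
CLUSTER_REQUESTS = {"enrollment", "identification"}
LOCAL_REQUESTS = {
    "verification", "update", "delete",
    "exception_treatment", "quality_treatment", "get", "list",
}
# Local requests after which the API Handler pushes a Maximum-priority task.
REFRESH_REQUESTS = {"update", "delete"}


def route(request_type: str, adds_biometrics: bool = False) -> dict:
    """Describe how a request would be handled under the rules above."""
    if request_type in CLUSTER_REQUESTS or (
        request_type == "update" and adds_biometrics
    ):
        # Pushed to a Pending Tasks topic; the client is notified later.
        return {"where": "cluster", "reply": "async", "api_pushes_refresh": False}
    if request_type in LOCAL_REQUESTS:
        # Resolved by the receiving node; the client gets a direct reply.
        return {
            "where": "local",
            "reply": "sync",
            "api_pushes_refresh": request_type in REFRESH_REQUESTS,
        }
    raise ValueError(f"unknown request type: {request_type}")
```

For example, `route("verification")` describes a local, synchronous request, while `route("update", adds_biometrics=True)` describes a cluster-wide, asynchronous one.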

1.4.2. Master Module

This module is responsible for starting up (booting) the GBDS Node, managing the cluster state (e.g., redistributing cluster load when a node fails), and processing biometric transactions.

1.4.2.1. Node Manager

This component reads configuration files, starts the other components, continuously monitors the other cluster nodes, and decides how to redistribute biometric data in the cluster when a node fails.

1.4.2.2. Boot Handler

This component is responsible for loading biometric templates from HBase into RAM. Efficient biometric matching requires the templates to be present in RAM. Loading the entire biometric database to the cluster nodes’ memory and setting up internal indexes is a time-consuming task, but it ensures fast processing of transactions once the booting process is finished.

1.4.2.3. Task Processing Pipeline

The remaining components of the Master Module perform distributed biometric matching.

The Task Consumer component continuously consumes items from the Pending Task topics in Kafka. It always consumes a task from the highest-priority non-empty queue.
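The selection rule can be sketched with in-memory queues standing in for the Kafka topics. This is a simplified model, not the GBDS implementation: `next_task` and the `topics` mapping are hypothetical, and a real consumer would poll Kafka rather than Python deques.

```python
from collections import deque

# Priority names from highest to lowest, as listed in this guide.
PRIORITIES = ["Maximum", "Highest", "Higher", "High",
              "Default", "Low", "Lower", "Lowest"]


def next_task(topics: dict):
    """Pop the oldest task from the highest-priority non-empty queue.

    `topics` maps a priority name to a deque of tasks. Returns a
    (priority, task) pair, or None when every queue is empty.
    """
    for priority in PRIORITIES:
        queue = topics.get(priority)
        if queue:  # skips missing and empty queues
            return priority, queue.popleft()
    return None


topics = {
    "Default": deque(["search-1", "search-2"]),
    "Maximum": deque(["refresh-1"]),
}
first = next_task(topics)   # ("Maximum", "refresh-1")
second = next_task(topics)  # ("Default", "search-1")
```

Because the scan restarts from the top on every call, a newly arrived Maximum-priority refresh task is picked up before any remaining lower-priority work.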

The Matcher Supervisor component manages the matcher processes and/or threads (the choice depends on the biometric modality) and performs the biometric matching operations between the query templates in the transaction and the biometric templates present in the memory of the local node. Biometric template matching is not a trivial operation and involves complex algorithms.

The Consolidation Supervisor component organizes the results generated by the matchers and sends them to the Global Consolidator responsible for the current transaction, which may not be running in the local node.

The Global Consolidator component receives matching results from all nodes that contributed to the processing of the task/transaction and generates the complete consolidated matching results. Each task/transaction is globally consolidated in a single node, chosen deterministically by a hash function of the transaction/person identifier. This hash function evenly distributes global consolidation tasks among the available cluster nodes.
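A minimal sketch of deterministic node selection follows. The guide does not name the hash function GBDS uses, so SHA-256 reduced modulo the node count is an assumption made for illustration, as are the `consolidation_node` name and the node labels.

```python
import hashlib


def consolidation_node(identifier: str, nodes: list) -> str:
    """Pick the node that consolidates results for `identifier`.

    Every node computes the same answer from the same inputs, so the
    cluster agrees on the consolidator without extra coordination.
    """
    if not nodes:
        raise ValueError("cluster has no nodes")
    # Assumed hash: SHA-256 of the identifier, reduced modulo the node count.
    digest = hashlib.sha256(identifier.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(nodes)
    # Sorting makes the choice independent of the order nodes are listed in.
    return sorted(nodes)[index]
```

A plain modulo scheme like this one remaps most identifiers whenever the node list changes, so it only illustrates the even-distribution property described above.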

Some transactions, such as latent searches in forensic systems, require an additional matching operation to refine and/or reorder the matching results, called Post-Matching. The Post-Matching Supervisor manages the post-matcher processes/threads for such cases, and also runs only in the global consolidation node assigned to the transaction.

The Commit Handler component receives the final results from either the Global Consolidator or the Post-Matching Supervisor and commits the transaction results:

- All changes to the state of the biometric database are committed to HBase.

- If the transaction results require any cluster nodes to refresh their in-memory biometric data (e.g., a new person was added to the database as a result of an enroll request), an item is pushed to the Maximum-priority Pending Tasks Kafka topic.

- It pushes an item to the Results topic in Kafka, which will be delivered to the client application by the Notifier Module.
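The three commit steps can be sketched with in-memory stand-ins for HBase and the Kafka topics. Everything here is hypothetical: the `FakeCluster` class, the `commit` function, and the message shapes are assumptions, and the guide does not state that the steps run in this order.

```python
from dataclasses import dataclass, field


@dataclass
class FakeCluster:
    """In-memory stand-ins for HBase and the two kinds of Kafka topics."""
    hbase: dict = field(default_factory=dict)
    max_priority_tasks: list = field(default_factory=list)
    results: list = field(default_factory=list)


def commit(cluster, transaction_id, changes, final_results):
    """Apply the three commit steps described above (order assumed)."""
    # 1. Persist every change to the biometric database.
    cluster.hbase.update(changes)
    # 2. Ask nodes to refresh their in-memory data only if records changed.
    if changes:
        cluster.max_priority_tasks.append(
            {"type": "refresh", "records": sorted(changes)}
        )
    # 3. Publish the result for the Notifier Module to deliver.
    cluster.results.append(
        {"transaction": transaction_id, "results": final_results}
    )
```

In this model, an identification that changes no records produces only a Results item, while an enrollment that adds a record also produces a refresh task.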

1.4.3. Notifier Module

This module has one main component, the Notification Handler, which continuously consumes items from the Results Kafka topic and sends HTTP/HTTPS notifications to the client applications, asynchronously informing them of the status of their requests.

The Notifier Module is a singleton and is active in only one node of the cluster at any given time. The node that runs the Notifier Module is chosen by the system administrator.

1.5. Transaction Workflows

This section illustrates how each type of transaction is processed by GBDS.

1.5.1. Identification (1:N)

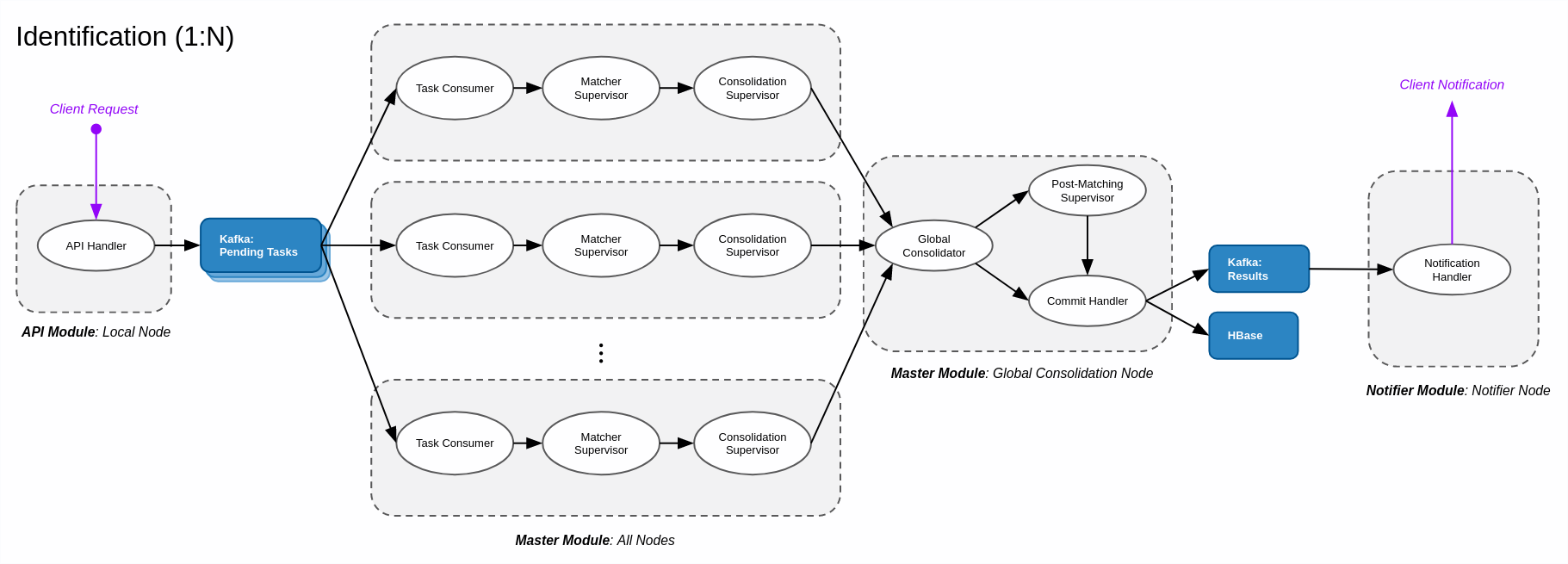

In an identification (1:N search) transaction, the client wants to search the biometric database for matches with query biometric data. The entire database may have to be searched. The API Handler receives the request in the node the request was sent to. If the query data contains raw biometric data (images) instead of templates, the API Handler performs biometric template extraction at this local node. The transaction is then pushed to a Pending Tasks Kafka topic.

All cluster nodes eventually consume the item from a Pending Tasks topic (Task Consumer module) and perform their part of the biometric search (Matcher Supervisor and Consolidation Supervisor modules). Each node’s results are sent to the Global Consolidator at the Global Consolidator Node, determined by the transaction identifier.

In the Global Consolidator Node, the Global Consolidator module waits for the cluster to finish the search operation and gathers the final results. Post-matching is performed, if required (Post-Matching Supervisor module), and the Commit Handler writes the results to HBase and pushes an item to the Results Kafka Topic.

The Notifier Module singleton, running on the Notifier Node, eventually consumes the associated item in the Results Kafka Topic and sends an asynchronous notification to the client application, informing it that the transaction has concluded.
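The search part of this workflow follows a scatter-gather pattern, which can be sketched as below. The `local_search` and `consolidate` functions, the `score` callback, and the `top_k` cutoff are hypothetical names, and the set-overlap score in the usage lines is a toy stand-in for real biometric matching.

```python
import heapq


def local_search(query, templates, score, top_k=3):
    """Run on each node: score the query against that node's templates."""
    scored = (
        (score(query, template), person_id)
        for person_id, template in templates.items()
    )
    # Keep only the best candidates, highest score first.
    return heapq.nlargest(top_k, scored)


def consolidate(partial_results, top_k=3):
    """Run on the consolidator node: merge every node's candidate list."""
    merged = (hit for hits in partial_results for hit in hits)
    return heapq.nlargest(top_k, merged)


# Toy data: templates are sets, and the score is the size of the overlap.
node_a = {"p1": {1, 2, 3}, "p2": {7, 8}}
node_b = {"p3": {1, 2}, "p4": {9}}
query = {1, 2, 3}
overlap = lambda q, t: len(q & t)

partials = [local_search(query, node, overlap) for node in (node_a, node_b)]
best = consolidate(partials, top_k=2)  # [(3, "p1"), (2, "p3")]
```

Each node only sees its own share of the database, so the consolidator is the first place where the global ranking exists.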

1.5.2. Enrollment

In an Enrollment transaction, the client requests the insertion of a new person in the database, provided that the biometric data is not a duplicate of an existing record. The processing workflow for this transaction is very similar to that of the Identification transaction, as it involves a 1:N search for duplicate records. Since the transaction may require all nodes to refresh their local in-memory templates (to acknowledge the newly added person), the Commit Handler will push a new item to a Pending Tasks Kafka topic with Maximum priority, to force all nodes to refresh. If the transaction generates an exception that requires manual review, it remains suspended until the review is performed by another transaction.

This workflow is also performed when an update transaction adds new biometric data to an existing record.
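The outcome of the duplicate search can be sketched as a simple decision. The score `threshold`, the `enroll_decision` name, and the returned fields are assumptions made for illustration; the guide does not describe how GBDS decides that a candidate is a suspected duplicate.

```python
def enroll_decision(candidate_scores: dict, threshold: float) -> dict:
    """Decide the outcome of an enrollment after its 1:N duplicate search.

    `candidate_scores` maps an existing person identifier to the
    consolidated matching score against the new biometric data.
    """
    suspects = sorted(
        person_id
        for person_id, score in candidate_scores.items()
        if score >= threshold
    )
    if suspects:
        # Suspected duplicate: raise an exception item, do not refresh yet.
        return {
            "status": "exception",
            "suspected_duplicates": suspects,
            "push_refresh": False,
        }
    # No duplicate found: the person is inserted and nodes must refresh.
    return {"status": "enrolled", "suspected_duplicates": [], "push_refresh": True}
```

Under these assumptions, an enrollment with no candidate at or above the threshold is committed and triggers a refresh, while any suspect leaves the transaction waiting for review.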

1.5.3. Verification (1:1), Get, List

In a Verification transaction, the client wants to verify whether a query biometric matches a specific person present in the database. This transaction is handled by the API Module in the same node that receives the request. The API Module fetches the person’s biometric data from HBase, performs the biometric matching locally, and responds to the client synchronously.

Get and List transactions retrieve existing records and/or results from GBDS. These are handled locally by the API Module, which fetches the requested information from HBase and responds to the client synchronously.

1.5.4. Update, Delete

In an Update transaction, the client wants to change either biographic or biometric data of an existing record. If new biometric data is being inserted, the transaction follows the flow of enrollment, since the entire database must be searched for duplicates. Otherwise, the API Module performs any required biometric template extraction, updates HBase, synchronously responds to the client, and pushes a new item with Maximum priority to the Pending Tasks Kafka topic, forcing all cluster nodes to refresh and acknowledge the modified biometric data.

In a Delete transaction, the client requests to remove a person from the database. The API Module performs the removal from HBase and responds synchronously to the client. It also pushes a new item with Maximum priority to the Pending Tasks Kafka topic, forcing all cluster nodes to refresh and acknowledge the deletion.
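What a node does when it consumes such a refresh item can be sketched as follows. The `apply_refresh` function and the `owns` predicate are hypothetical, and plain dictionaries stand in for HBase and for the node's in-memory templates.

```python
def apply_refresh(memory: dict, hbase: dict, record_ids, owns=lambda rid: True):
    """Bring a node's in-memory templates in line with the database.

    `owns` is an assumed predicate telling whether this node is
    responsible for a record it does not hold in memory yet.
    """
    for record_id in record_ids:
        if record_id in hbase:
            # Updated or new record: reload it if this node holds or owns it.
            if record_id in memory or owns(record_id):
                memory[record_id] = hbase[record_id]
        else:
            # Record no longer exists in the database: drop the stale copy.
            memory.pop(record_id, None)
```

Because refresh items travel in the Maximum-priority topic, a node applies them before it takes any other pending task, so later searches do not run against stale data.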

1.5.5. Exception Treatment, Quality Treatment

GBDS manages Exceptions and Quality Control items. These are generated when enrollment transactions find suspected duplicates or update transactions do not find a match between the query and the reference biometrics (Exceptions) and when biometric data with low quality is inserted (Quality Control items). These items may require manual review, depending on GBDS configurations. These transactions update the status of pending Exception Treatment and Quality Control items. The API Handler processes these transactions locally, updates their status in HBase, and responds synchronously to the client.